In 2025, social media bots are more advanced than ever, mimicking human behavior and influencing online interactions on platforms like Facebook and Twitter. While some bot accounts serve useful functions, many are used to spread misinformation, manipulate opinions, and even engage in stock market manipulation.

For SaaS companies, e-commerce businesses, and customer service teams, bots create fake accounts, distort analytics, and flood social media posts with nonsensical content. Some malicious bots steal profile information and deceive real users, making it hard to distinguish between human accounts and programmed profiles.

This post reveals the hidden dangers of social bots, their impact on businesses and organizations, and strategies to detect and protect against these threats.

What Are Social Media Bots in 2025?

Social media bots are automated social media accounts programmed to mimic human behavior on platforms like Facebook, Twitter, and other social media networks. These bots can engage in online interactions, generate social media posts, and even participate in discussions with real users.

How Do Social Media Bots Work?

Most bot accounts are designed to perform automated tasks, such as:

- Liking, sharing, and commenting on posts to amplify engagement.

- Following and messaging users to simulate real human interactions.

- Spreading misinformation or malicious content to manipulate public opinion.

- Engaging in spam campaigns, phishing scams, or even identity theft.

These automated accounts can be controlled by companies, organizations, or even cybercriminals, making it hard to tell whether you’re interacting with a real person or a bot.

Types of Social Media Bots in 2025

There are several types of social bots, each with different purposes:

- Engagement Bots

  - Designed to increase popularity by liking, commenting, and following real users.

  - Often used by influencers and brands to artificially boost their reach.

  - Example: A bot automatically generating social media posts to make an influencer appear more active.

- Customer Support Bots

  - Help businesses engage with users by answering FAQs and automating support tickets.

  - Some are helpful, but malicious bots can impersonate customers and waste support resources.

  - Example: A bot creating fake support tickets to slow down a company’s response time.

- Malicious Bots

  - Used for spamming, spreading false information, phishing, and cyberattacks.

  - Can pose as real people to trick businesses and manipulate opinions.

  - Example: A bot spreading disinformation about a company to harm its reputation.

- AI-Generated User Accounts

  - Platforms like Facebook and Twitter are now allowing AI-generated accounts that interact with real users.

  - Some of these accounts look so real that they even have profile pictures, generic profile information, and realistic messages.

  - Example: An AI-driven social media user debating in political discussions to influence public opinion.

Why Are These Bots a Growing Problem?

As AI technology improves, social bots are becoming harder to detect. Many of these accounts look just like real humans, complete with images, profile information, and human-like conversations. For businesses, this means:

- Fake accounts can flood brand pages with spam and fake engagement.

- Bots can manipulate analytics, making it difficult to track real interactions.

- Malicious bots can create disinformation campaigns, leading to PR nightmares.

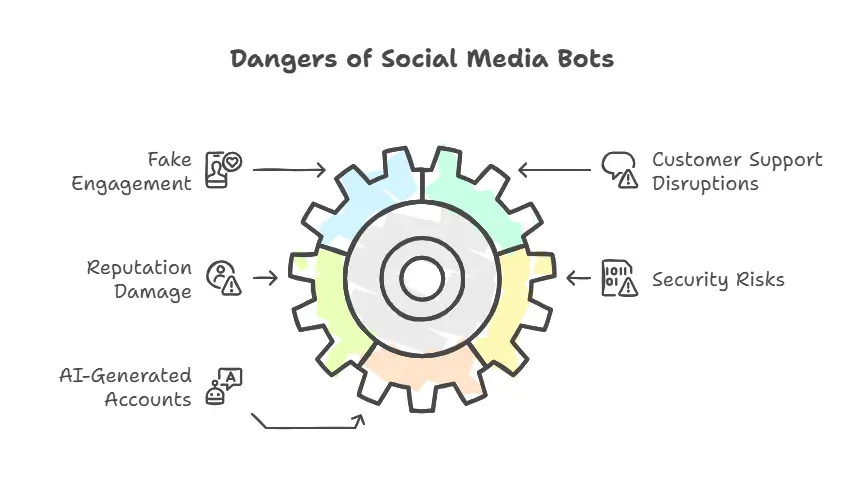

The Hidden Dangers of Social Media Bots

While social media bots can automate online interactions and improve engagement, they also pose serious risks for businesses, social media platforms, and real users. From spreading misinformation to manipulating opinions, these bot accounts create problems that are harder to detect than ever.

A. Fake Engagement and Misleading Analytics

Businesses rely on social media posts to gauge audience interest, but social bots distort the numbers. These automated accounts artificially boost likes, comments, and shares, making it difficult to measure real engagement.

💡 Example:

An e-commerce company launches a marketing campaign on Facebook and sees a surge in likes and shares. However, after analyzing the data, they realize that a significant portion of the engagement came from fake accounts and bots, rather than real people interested in their product.

🚨 Why This Is a Problem:

- Inflated engagement leads to misleading analytics.

- Wasted advertising budgets targeting bot accounts instead of real users.

- False sense of popularity that doesn’t translate into real sales or conversions.

B. Customer Support Disruptions

Many businesses use AI-driven customer support bots to engage with customers. But malicious bots can mimic human behavior, overwhelming customer service teams with fake queries and spam.

💡 Example:

A SaaS company’s chatbot receives thousands of support messages, but most of them are from automated accounts programmed to waste time. This clogs up the system and slows responses for real customers who need help.

🚨 Why This Is a Problem:

- Customer service teams struggle to identify real support requests.

- Bots waste time and resources, delaying responses to actual customers.

- Frustrated users may leave negative reviews, damaging brand reputation.

C. Reputation and PR Nightmares

Bot accounts don’t just manipulate engagement; they also spread malicious content and false information about companies and individuals. Some businesses even use social bots to attack competitors.

💡 Example:

A competing e-commerce business deploys fake accounts to leave hundreds of 1-star reviews on a rival’s page. These bots spread misinformation, making the competitor look unreliable to potential customers.

🚨 Why This Is a Problem:

- Fake reviews can destroy a company’s reputation.

- Malicious bots can manipulate opinions, making a brand look bad.

- Businesses need to spend time and money detecting and reporting fake activity.

D. Security Risks and Identity Theft

Not all social media bots are designed for engagement—some are created for fraud, phishing, and identity theft. These automated accounts can steal profile information, messages, and even financial data.

💡 Example:

A bot impersonates a human account and sends phishing messages to social media users, tricking them into sharing login credentials or credit card details.

🚨 Why This Is a Problem:

- Hackers use bots to steal private information from real users.

- Fake accounts impersonate employees or brands to trick customers.

- Cybercriminals manipulate opinions to push users into bad financial decisions, such as buying a stock that bots have artificially hyped.

E. The Rise of AI-Generated User Accounts

Platforms like Facebook, Twitter, and Instagram are now allowing AI-generated users—automated accounts that look and act like real people. These accounts have profile pictures, language patterns, and human-like interactions, making them nearly impossible to detect.

💡 Example:

A bot-driven conversation in a political discussion influences public opinion, making it difficult for real users to differentiate between humans and programmed accounts.

🚨 Why This Is a Problem:

- AI-generated accounts can be used to spread disinformation.

- Fake conversations manipulate discussions and amplify false narratives.

- Businesses and governments struggle to detect who is real and who is a bot.

How to Detect and Fight Against Social Media Bots

Now that we’ve uncovered the dangers of social media bots, the next step is understanding how to detect and protect your business from these threats. Whether you’re managing social media platforms, handling customer interactions, or monitoring brand reputation, identifying bot accounts early is crucial.

A. How to Spot Social Media Bots

Most bot accounts follow specific patterns that make them detectable. Here are key signs to look for:

1. Generic or AI-Generated Profile Information

- Many bot accounts have profile pictures that look fake, AI-generated, or stolen from real users.

- Their profile information is often generic, with vague descriptions or random characters.

💡 Example: A bot’s profile description says: “Lover of life. Tech enthusiast. Dreamer.” No real details about location, job, or personal interests.

2. High Activity with Low Engagement Quality

- Bots are programmed to like, share, and comment on social media posts at an unnatural speed.

- Their comments often contain nonsensical content, repetitive phrases, or generic responses.

💡 Example: A business posts an update, and several accounts comment, “Great post!” or “I love this!”—but none of them actually discuss the content.
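The "Great post!" pattern above can be measured rather than eyeballed. The following is a minimal sketch, not a production classifier: the phrase list `GENERIC_PHRASES` and the function names are hypothetical, and real comments from enthusiastic customers will sometimes match too, so treat the result as a signal to review rather than proof.

```python
import re
from collections import Counter

# Hypothetical list of low-effort phrases; tune it for your own audience.
GENERIC_PHRASES = {"great post", "i love this", "nice", "amazing", "so cool"}


def normalize(comment: str) -> str:
    """Lowercase and strip punctuation so 'Great post!' matches 'great post'."""
    return re.sub(r"[^a-z0-9\s]", "", comment.lower()).strip()


def generic_comment_share(comments: list[str]) -> float:
    """Return the fraction of comments that are generic or exact repeats."""
    if not comments:
        return 0.0
    normalized = [normalize(c) for c in comments]
    counts = Counter(normalized)
    flagged = sum(
        1
        for text in normalized
        if text in GENERIC_PHRASES or counts[text] > 1
    )
    return flagged / len(comments)
```

A post where most comments are flagged this way is worth a closer look before its engagement numbers are trusted.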

3. Suspicious Language and Interaction Patterns

- Bots struggle to mimic human behavior in conversations.

- Their language patterns may include broken sentences, repetitive phrases, or mismatched responses.

💡 Example: A social bot replies to a product inquiry with:

- User: “How long does delivery take?”

- Bot: “This product is amazing! Best service ever!” (irrelevant response)

4. Unusual Following-to-Follower Ratio

- Many bot accounts follow thousands of users but have very few followers themselves.

- Others may have inflated follower counts but low engagement.

💡 Example: An account follows 10,000 users but only has 150 followers, signaling automated behavior.
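The ratio check in the example above is simple arithmetic, so it is easy to automate. This is a rough sketch under stated assumptions: the `Account` type, the `min_following` floor, and the `ratio_threshold` of 20 are illustrative choices, not values defined by any platform.

```python
from dataclasses import dataclass


@dataclass
class Account:
    handle: str
    following: int
    followers: int


def following_ratio(account: Account) -> float:
    """Accounts followed per follower; zero followers is treated as one."""
    return account.following / max(account.followers, 1)


def looks_automated(
    account: Account,
    min_following: int = 1000,      # assumed floor: ignore small accounts
    ratio_threshold: float = 20.0,  # assumed cutoff, tune on your own data
) -> bool:
    """Flag accounts that follow many users but attract very few followers."""
    return (
        account.following >= min_following
        and following_ratio(account) >= ratio_threshold
    )
```

With these defaults, the account from the example (10,000 following, 150 followers) is flagged. The ratio alone is a weak signal, so it works best combined with the other signs in this list.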

B. Strengthening Customer Support and Social Media Management

Since malicious bots can disrupt customer interactions, businesses must strengthen their social media and customer service operations. Here’s how:

1. Use AI-Based Detection Tools

- Platforms like Twitter, Facebook, and Instagram have built-in tools to detect fake accounts, but they’re not foolproof.

- Third-party AI security tools can help identify and block bot accounts.

Recommended tools:

✅ Bot Sentinel – Identifies and flags suspicious social bots.

✅ ShieldSquare (now part of Radware Bot Manager) – Helps businesses detect and block automated bot traffic on their websites and apps.

2. Train Customer Service Teams to Spot Bots

- Customer support teams should be trained to recognize fake customer inquiries.

- Implement CAPTCHA and other verification methods to filter out bots.

💡 Example: If an e-commerce support agent gets multiple similar inquiries in a short time, it could be bot-driven spam.
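One way to surface "multiple similar inquiries in a short time" automatically is a sliding time window over incoming tickets. The sketch below assumes tickets arrive as `(timestamp_seconds, text)` pairs; the function name `find_bursts` and the default window and count are hypothetical and would need tuning against real support traffic.

```python
from collections import defaultdict


def find_bursts(
    tickets: list[tuple[int, str]],
    window_seconds: int = 300,
    min_count: int = 5,
) -> set[str]:
    """Return normalized texts seen at least min_count times within one window."""
    by_text: dict[str, list[int]] = defaultdict(list)
    for timestamp, text in tickets:
        # Collapse case and whitespace so trivial variations group together.
        key = " ".join(text.lower().split())
        by_text[key].append(timestamp)

    bursts: set[str] = set()
    for key, stamps in by_text.items():
        stamps.sort()
        start = 0
        for end in range(len(stamps)):
            # Shrink the window until it spans at most window_seconds.
            while stamps[end] - stamps[start] > window_seconds:
                start += 1
            if end - start + 1 >= min_count:
                bursts.add(key)
                break
    return bursts
```

Flagged texts can be routed to a review queue instead of being dropped outright, since a real outage can also produce many identical questions at once.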

3. Set Up Engagement Filters

- Use keyword and pattern recognition to automatically block nonsensical content.

- Restrict new accounts from posting links to reduce malicious content spread by bots.

💡 Example: Many bot accounts share spam links—setting up filters can automatically flag or remove these.
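The two filters described above, pattern-based blocking and link restrictions for new accounts, can be sketched in a few lines. Everything here is illustrative: the patterns in `SPAM_PATTERNS`, the seven-day age limit, and the `moderate` function are assumptions, and a real deployment would use whatever moderation hooks the platform in question actually provides.

```python
import re

URL_PATTERN = re.compile(r"https?://\S+|www\.\S+", re.IGNORECASE)

# Hypothetical spam patterns; the last one catches a character repeated 6+ times.
SPAM_PATTERNS = [
    re.compile(pattern, re.IGNORECASE)
    for pattern in (r"free followers", r"click here", r"(.)\1{5,}")
]


def moderate(text: str, account_age_days: int, min_age_days_for_links: int = 7) -> str:
    """Return 'block', 'hold', or 'allow' for a comment."""
    if any(pattern.search(text) for pattern in SPAM_PATTERNS):
        return "block"
    # New accounts may comment, but links are held for manual review.
    if URL_PATTERN.search(text) and account_age_days < min_age_days_for_links:
        return "hold"
    return "allow"
```

Holding rather than deleting link posts from new accounts keeps genuine new customers from being silently discarded.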

C. Protecting Your Brand and Customer Data

With social bots spreading disinformation, businesses must take proactive steps to protect their reputation.

1. Monitor Brand Mentions

- Regularly track mentions of your business to catch fake reviews, false information, or malicious content early.

- Use tools like Google Alerts and Mention to detect bot-driven attacks.

💡 Example: A SaaS company finds a sudden wave of negative reviews that all use similar wording. This is likely a bot attack.
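"Similar wording" can be approximated by comparing the sets of words two reviews use. The sketch below uses Jaccard similarity (shared words divided by total distinct words); the function names and the 0.6 threshold are assumptions, and this is a simple stand-in rather than how any particular monitoring tool works.

```python
import re
from itertools import combinations


def word_set(text: str) -> set[str]:
    """Lowercased set of words in a review."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Share of distinct words that two texts have in common (0.0 to 1.0)."""
    words_a, words_b = word_set(a), word_set(b)
    if not words_a and not words_b:
        return 0.0
    return len(words_a & words_b) / len(words_a | words_b)


def similar_pairs(reviews: list[str], threshold: float = 0.6) -> list[tuple[int, int]]:
    """Return index pairs of reviews whose wording overlaps heavily."""
    return [
        (i, j)
        for (i, a), (j, b) in combinations(enumerate(reviews), 2)
        if jaccard(a, b) >= threshold
    ]
```

Comparing every pair is fine for a few hundred reviews; a larger volume would call for a more scalable approach. Many high-overlap pairs posted in a short period are a reasonable trigger for a manual review.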

2. Strengthen Cybersecurity Measures

- Implement multi-factor authentication (MFA) to prevent bot-driven account takeovers.

- Regularly update security settings on social media platforms to prevent automated attacks.

💡 Example: Hackers use bots to brute-force login credentials—MFA can block these attacks.
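To see why MFA blunts brute-force attacks, it helps to look at how a time-based one-time password (TOTP, RFC 6238) is derived: the code changes every 30 seconds and depends on a shared secret, so a guessed password alone is not enough. The sketch below uses only the Python standard library and is for illustration; the function names are hypothetical, and a production system should rely on a vetted authentication library or the platform's built-in MFA rather than hand-rolled code.

```python
import hashlib
import hmac
import struct


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Derive a time-based one-time password (HMAC-SHA1 variant of RFC 6238)."""
    counter = unix_time // step
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte pick a 4-byte slice.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10**digits).zfill(digits)


def verify(secret: bytes, submitted: str, unix_time: int) -> bool:
    """Compare codes in constant time to avoid leaking information via timing."""
    return hmac.compare_digest(totp(secret, unix_time), submitted)
```

A real verifier would typically also accept the adjacent time step to tolerate clock drift and would rate-limit failed attempts.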

3. Report and Block Suspicious Bots

- Social media platforms allow businesses to report and remove bot accounts.

- Encourage users to report fake accounts impersonating your brand.

💡 Example: A Twitter bot is using your business name to spread malicious content. Reporting it promptly helps limit further damage.

The Future of Social Media Bots – What’s Next?

The world of social media bots is rapidly evolving, and in 2025, these automated accounts are more sophisticated than ever. As AI technology improves, the line between real humans and bot accounts continues to blur, creating new challenges for businesses, social media platforms, and customer service teams.

So, what’s next for social bots, and how will they impact online interactions, public opinion, and security?

A. Bots Will Become Even More Human-Like

AI-driven bots are now capable of mimicking human behavior so well that they can participate in real discussions, generate social media posts, and even create personalized responses.

💡 Example:

A bot account could join a conversation about a trending topic, responding with opinions, emotions, and even humor, making it nearly impossible to tell if it’s a real person or not.

🚨 Why This Matters:

- Businesses will struggle to distinguish real users from bots.

- Social bots could manipulate opinions more effectively by blending into conversations.

- Fake accounts could be used for fraud, spam, and misinformation at an unprecedented scale.

B. AI-Generated User Accounts Will Become the Norm

Facebook, Twitter, and other platforms are now allowing AI-generated accounts that look and act like real people. These automated social media accounts come with profile pictures, generic profile information, and even a history of interactions to appear authentic.

💡 Example:

A Facebook user could unknowingly engage with an AI-generated account that shares false information, influencing their beliefs and decisions.

🚨 Why This Matters:

- Manipulating public opinion will become easier, leading to misinformation campaigns.

- Businesses will find it harder to measure real engagement as more bot accounts flood social media platforms.

- Scammers could create AI-powered bots to impersonate real humans, leading to identity theft and fraud.

C. More Bots Will Be Used for Stock Market Manipulation

Automated accounts are already being used to influence stock prices by spreading malicious content, false information, and fake hype around financial markets. In 2025, this problem is expected to grow.

💡 Example:

A group of bots could artificially boost the popularity of a stock or cryptocurrency, tricking real investors into buying before the price crashes.

🚨 Why This Matters:

- Financial markets could become more unstable due to bot-driven manipulation.

- Investors may struggle to separate real trends from bot-generated hype.

- Regulators and financial institutions will need better tools to detect fraudulent activity.

D. Stricter Regulations May Be Introduced

Governments and social media platforms are starting to realize the threat of social bots, leading to discussions about new regulations and AI detection tools.

💡 Example:

A country introduces a law that requires platforms like Twitter and Facebook to label AI-generated user accounts to prevent misinformation.

🚨 Why This Matters:

- Businesses may face compliance requirements for dealing with bots.

- AI detection tools may become mandatory for platforms handling large user bases.

- More transparency in online interactions could reduce the spread of disinformation and fake accounts.

E. The Rise of Anti-Bot AI and Detection Tools

As bots get smarter, so do AI-powered detection tools. Businesses, governments, and cybersecurity companies are investing in bot detection AI to protect against fake engagement, scams, and fraud.

💡 Example:

A social media platform integrates real-time AI detection that scans social media posts, profile information, and engagement patterns to instantly flag bot accounts.

🚨 Why This Matters:

- Businesses will need to invest in better detection tools to prevent bot-driven fraud.

- Users may soon see AI-generated labels on profiles, helping them identify real vs. fake accounts.

- Cybersecurity companies will continue to develop new solutions to fight malicious bots.

Conclusion

The rise of social media bots in 2025 presents serious challenges for businesses, social media platforms, and real users. These automated accounts are no longer just basic programs performing automated tasks—they can now mimic human behavior, manipulate opinions, spread misinformation, and engage with real users in a way that makes them nearly undetectable.

For SaaS companies, e-commerce businesses, and customer support teams, the impact is significant. Bot accounts distort analytics, disrupt customer support, and influence public opinion—sometimes even leading to identity theft and financial fraud.

FAQs

Q1: How can I tell if a social media account is a bot?

Look for signs like:

- Generic profile information (no personal details, vague descriptions).

- AI-generated or stolen profile pictures.

- Unusual engagement patterns (posting too frequently or responding instantly).

- Repetitive, nonsensical content in messages or comments.

- A very high following-to-follower ratio (e.g., follows thousands but has few followers).

Using AI-based bot detection tools can also help identify fake accounts.

Q2: How do social media bots affect businesses?

Social bots can:

- Distort engagement metrics, making it hard to measure real customer interactions.

- Overwhelm customer support teams with fake inquiries.

- Spread misinformation or fake reviews, damaging brand reputation.

- Steal profile information and attempt identity theft.

- Manipulate opinions about products, services, or competitors.

Q3: Are all social media bots bad?

Not all bots are harmful. Some automated accounts help businesses by:

- Managing customer support inquiries (e.g., AI-powered chatbots).

- Automating content posting and scheduling social media posts.

- Monitoring trends and gathering data for research.

However, malicious bots can spread misinformation, manipulate discussions, and engage in fraud, making it essential to detect and regulate bot activity.

About the Author

Gaurav Nagani was the Founder of Desku, an AI-powered customer service software platform.
